GOAL

A key property of the brain’s computation is neural processing of visual information. The human brain can rapidly recognize thousands of objects while using less power than modern computers use in “quiet mode”. A potential key component enabling this remarkable performance is the use of sparse representations – at any given moment, only a tiny fraction of the visual neurons fire. In this proposed research we wish to

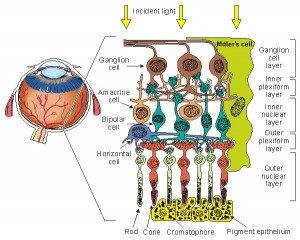

Figure 2: An illustration of the computation done in the retina. Significant sparsification is performed in the analog domain. We plan to pursue a similar strategy in our research.

use machine learning tools and advanced optimization techniques in order to learn sparse, hierarchical representations that can enable real-time, high-quality visual recognition. The work goes far beyond the standard “dictionary learning” for low-level image patches in that it seeks to go all the way from the analog signal to high-level recognition with sparse, hierarchical representations, and to develop novel, efficient algorithms that can take advantage of the sparsity in the representation.
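For readers less familiar with the “dictionary learning” baseline mentioned above, the sketch below illustrates its inner sparse-coding step: given a fixed dictionary D whose columns are atoms, find a code a with few nonzero entries such that x ≈ D a, by minimizing ½‖x − D a‖² + λ‖a‖₁ with ISTA (iterative shrinkage-thresholding). This is a standard textbook technique chosen purely for illustration, not the method this proposal will develop; the function names, the toy dictionary and the value λ = 0.1 are hypothetical. It is written in plain Python with no dependencies.

```python
# Minimal ISTA (iterative shrinkage-thresholding) sketch for sparse coding:
#   minimize 0.5 * ||x - D a||^2 + lam * ||a||_1
# Illustrative only; D is a hypothetical list of atoms (columns of floats).


def soft_threshold(v, t):
    """Shrink v toward zero by t; values with |v| <= t become exactly 0."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def reconstruct(atoms, a):
    """Return D a, i.e. the coefficient-weighted sum of the atoms."""
    dim = len(atoms[0])
    return [sum(a[j] * atoms[j][i] for j in range(len(atoms))) for i in range(dim)]


def objective(x, atoms, a, lam):
    """Half the squared reconstruction error plus the L1 penalty."""
    r = [xi - yi for xi, yi in zip(x, reconstruct(atoms, a))]
    return 0.5 * sum(ri * ri for ri in r) + lam * sum(abs(aj) for aj in a)


def ista(x, atoms, lam, n_iter=1000):
    """Return (sparse code a, objective value after each iteration)."""
    # Step size 1/L with L = squared Frobenius norm of D. This upper-bounds the
    # largest eigenvalue of D^T D, so no iteration can increase the objective.
    L = sum(v * v for atom in atoms for v in atom)
    a = [0.0] * len(atoms)
    history = []
    for _ in range(n_iter):
        # Residual r = x - D a; D^T r is the negative gradient of the quadratic term.
        r = [xi - yi for xi, yi in zip(x, reconstruct(atoms, a))]
        neg_grad = [sum(di * ri for di, ri in zip(atom, r)) for atom in atoms]
        # Gradient step on the quadratic term, then shrinkage for the L1 term.
        a = [soft_threshold(aj + gj / L, lam / L) for aj, gj in zip(a, neg_grad)]
        history.append(objective(x, atoms, a, lam))
    return a, history


if __name__ == "__main__":
    # Toy overcomplete dictionary (hypothetical): three unit vectors plus the
    # normalized diagonal, i.e. 4 atoms for a 3-dimensional signal.
    s = 3 ** -0.5
    atoms = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [s, s, s]]
    code, history = ista([2.0, 0.0, 0.0], atoms, lam=0.1)
    print([round(c, 6) for c in code], round(history[-1], 6))
```

In this toy example the signal x = (2, 0, 0) is explained by the first atom alone: the optimality conditions of the objective give the unique code (2 − λ, 0, 0, 0) = (1.9, 0, 0, 0), and the soft-thresholding step drives the other three coefficients to exactly zero. Exact zeros of this kind are what sparsity-aware algorithms could skip over to save computation.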

The research team, led by Prof. Yair Weiss and Prof. Daphna Weinshall (HUJI), includes experts in machine learning, sparse optimization, sampling, neural computation, and object recognition. The team will develop new algorithms for learning sparse, hierarchical representations and for using these representations in visual recognition on a small power budget.

The main outcomes of this research project will be algorithms for learning sparse, hierarchical representations and methods for using these representations in visual recognition on a small power budget. Most of the first year will be devoted to initial sketches of the approach, including the development of rudimentary algorithmic solutions; subsequent years will be used to improve the approaches continuously through a cycle of experimentation and theory.

- I. Eyal, F. Junqueira, and I. Keidar, “Thinner Clouds with Preallocation”, HotCloud ’13.

Yair Weiss ➭

Daphna Weinshall ➭

- A. Zweig and D. Weinshall, “Hierarchical Regularization Cascade for Joint Learning”, in Proceedings of the 30th International Conference on Machine Learning (ICML), Atlanta, GA, June 2013.

- A. Zweig and D. Weinshall, “Hierarchical Multi-Task Learning: a Cascade Approach Based on the Notion of Task Relatedness”, ICML 2013.

Yonina Eldar ➭

- Y. Shechtman, A. Beck, and Y. C. Eldar, “GESPAR: Efficient Phase Retrieval of Sparse Signals”, arXiv:1301.1018, to appear in IEEE Trans. on Signal Processing.

- A. Beck and Y. C. Eldar, “Sparsity Constrained Nonlinear Optimization: Optimality Conditions and Algorithms”, SIAM Journal on Optimization, vol. 23, no. 3, pp. 1480-1509, Oct. 2013.

- Y. C. Eldar and S. Mendelson, “Phase Retrieval: Stability and Recovery Guarantees”, Applied and Computational Harmonic Analysis, Elsevier, Sep. 2013.

- Z. Tan, Y. C. Eldar, A. Beck, and A. Nehorai, “Smoothing and Decomposition for Analysis Sparse Recovery”.

- J. Bioucas-Dias, D. Cohen, and Y. C. Eldar, “CoVALSA: Covariance Estimation From Compressive Measurements Using Alternating Minimization”, EUSIPCO 2014, the 22nd European Signal Processing Conference.

- D. Cohen and Y. C. Eldar, “Sub-Nyquist Sampling for Power Spectrum Sensing in Cognitive Radios: A Unified Approach”, submitted to IEEE Transactions, Aug. 2013.

- S. Patterson, Y. C. Eldar, and I. Keidar, “Distributed Compressed Sensing for Static and Time-Varying Networks”, submitted to IEEE Transactions, Aug. 2013.

Ron Meir ➭

Amnon Shashua ➭